Openstack Services timeout on Rabbimq

There is one of weird issue that our team had observed on the Rabbitmq, which keeps causing timeout from client side of Openstack services. We wil take Nova as an example

After restart a Rabbitmq host from an old Queen-version cluster, we can see all of our nova-compute services are down. Tracking the nova-compute.log, the error line looks something like this:

2024-04-04 00:01:49.109 52082 CRITICAL nova [req-d83c8f52-554f-442e-a41b-4889ee161bb5 - - - - -] Unhandled error: MessagingTimeout: Timed out waiting for a reply to message ID ecddcc092b264d48a000b1d191cca6c8

...

2024-04-04 00:01:49.109 52082 ERROR nova MessagingTimeout: Timed out waiting for a reply to message ID ecddcc092b264d48a000b1d191cca6c8

At first it's weird althought our nova-controller services are fine. Because there is not much information on the log, we added more verbose option in nova.conf

[DEFAULT]

...

debug = True

default_log_levels = amqp=DEBUG, amqplib=DEBUG, boto=WARN, qpid=WARN, sqlalchemy=WARN, suds=INFO, oslo.messaging=DEBUG, oslo_messaging=DEBUG, iso8601=WARN, requests.packages.urllib3.connectionpool=WARN, urllib3.connectionpool=WARN, websocket=WARN, requests.packages.urllib3.util.retry=WARN, urllib3.util.retry=WARN, keystonemiddleware=WARN, routes.middleware=WARN, stevedore=WARN, taskflow=WARN, keystoneauth=WARN, oslo.cache=INFO, dogpile.core.dogpile=INFO

...

After that we can see more logs. Looks like the nova-compute client already prepared the message but cannot send to the queue

2024-04-04 00:00:49.106 52082 DEBUG kombu.channel [req-d83c8f52-554f-442e-a41b-4889ee161bb5 - - - - -] [Kombu channel:1] exchange_declare(nowait=False, exchange='nova', durable=False, passive=False, arguments=None, type='topic', auto_delete=False) __wrapped /openstack/venvs/nova-17.0.8/lib/python2.7/site-packages/kombu/utils/debug.py:54

2024-04-04 00:00:49.107 52082 DEBUG kombu.channel [req-d83c8f52-554f-442e-a41b-4889ee161bb5 - - - - -] [Kombu channel:1] prepare_message('{"oslo.message": "{\\"_context_domain\\": null, \\"_msg_id\\": \\"ecddcc092b264d48a000b1d191cca6c8\\", \\"_context_global_request_id\\": null, \\"_context_quota_class\\": null, \\"_context_read_only\\": false, \\"_context_request_id\\": \\"req-d83c8f52-554f-442e-a41b-4889ee161bb5\\", \\"_context_service_catalog\\": [], \\"args\\": {\\"object_versions\\": {\\"ComputeNode\\": \\"1.18\\", \\"PciDevicePoolList\\": \\"1.1\\", \\"PciDevicePool\\": \\"1.1\\", \\"Service\\": \\"1.22\\", \\"HVSpec\\": \\"1.2\\"}, \\"objmethod\\": \\"get_minimum_version\\", \\"args\\": [\\"nova-compute\\"], \\"objname\\": \\"Service\\", \\"kwargs\\": {}}, \\"_unique_id\\": \\"88b69850b7ed42bc97f91b9eb952b967\\", \\"_context_resource_uuid\\": null, \\"_context_instance_lock_checked\\": false, \\"_context_is_admin_project\\": true, \\"_context_user\\": null, \\"_context_user_id\\": null, \\"_context_project_name\\": null, \\"_context_read_deleted\\": \\"no\\", \\"_context_user_identity\\": \\"- - - - -\\", \\"_reply_q\\": \\"reply_9ddda42c82894d66848c172f54c2abdd\\", \\"_context_auth_token\\": null, \\"_context_show_deleted\\": false, \\"_context_tenant\\": null, \\"_context_roles\\": [], \\"_context_is_admin\\": true, \\"version\\": \\"3.0\\", \\"_context_project_id\\": null, \\"_context_project_domain\\": null, \\"_context_timestamp\\": \\"2024-04-03T17:00:49.023334\\", \\"_context_user_domain\\": null, \\"_context_user_name\\": null, \\"method\\": \\"object_class_action_versions\\", \\"_context_project\\": null, \\"_context_remote_address\\": null}", "oslo.version": "2.0"}', 0, u'application/json', u'utf-8', {}, {u'delivery_mode': 2, u'expiration': '60000'}) __wrapped /openstack/venvs/nova-17.0.8/lib/python2.7/site-packages/kombu/utils/debug.py:54

2024-04-04 00:00:49.107 52082 DEBUG kombu.channel [req-d83c8f52-554f-442e-a41b-4889ee161bb5 - - - - -] [Kombu channel:1] basic_publish_confirm(<amqp.basic_message.Message objectat 0x7f3be2f4ea50>, mandatory=False, routing_key='conductor', immediate=False, exchange='nova') __wrapped /openstack/venvs/nova-17.0.8/lib/python2.7/site-packages/kombu/utils/debug.py:54

We did try to restart (which solved almost of the system problem), but it doesn't work. After hours of doing "trial and error", we decide to remove the /nova vhost of Rabbitmq and recreated it.

$ rabbitmqctl list_users

Listing users

cinder []

magnum []

heat []

neutron []

openstack []

keystone []

admin [administrator]

glance []

nova []

octavia []

$ rabbitmqctl list_vhosts

Listing vhosts

/neutron

/heat

/magnum

/keystone

/

/nova

/nova

/glance

/octavia

$ rabbitmqctl list_permissions -p /nova

Listing permissions in vhost "/nova"

nova .* .* .*

$ rabbitmqctl list_policies -p /nova

Listing policies

$ rabbitmqctl delete_vhost /nova

Deleting vhost "/nova"

$ rabbitmqctl add_vhost /nova

Creating vhost "/nova"

$ rabbitmqctl set_permissions nova ".*" ".*" ".*" -p /nova

Setting permissions for user "nova" in vhost "/nova"

On line 1, 14, 26, 30, we get the baseline before actually modifying the current system. After that we recreate vhost /nova and assign the corresponing permisison for the existing user (line 33, 36, 39). After that nova-compute services are up again.

Recreate vhost is a destructive action. Please do it with care especially for crucial system like billing.

Recheck all the nova services, we can see its output lots of log like this:

2024-04-04 11:38:23.462 13842 ERROR oslo.messaging._drivers.impl_rabbit [req-9a1cca89-50e1-4017-9d72-53a444dba892 - - - - -] [4c7d5a68-2d6c-4239-b0b5-88f76b29c3a3] AMQP server on x.x.x.x:5671 is unreachable: . Trying again in 1 seconds. Client port: 51448: timeout

Somethings got timeout?

Dig deeper into the rabbitmq logs

=ERROR REPORT==== 2-Apr-2024::11:54:07 ===

Channel error on connection <0.4370.2> (a.b.c.d:54904 -> x.x.x.x:5671, vhost: '/nova', user: 'nova'), channel 1:

operation basic.publish caused a channel exception not_found: no exchange 'reply_a06c4c2a39a340a6ba4680f0d3575a00' in vhost '/nova'

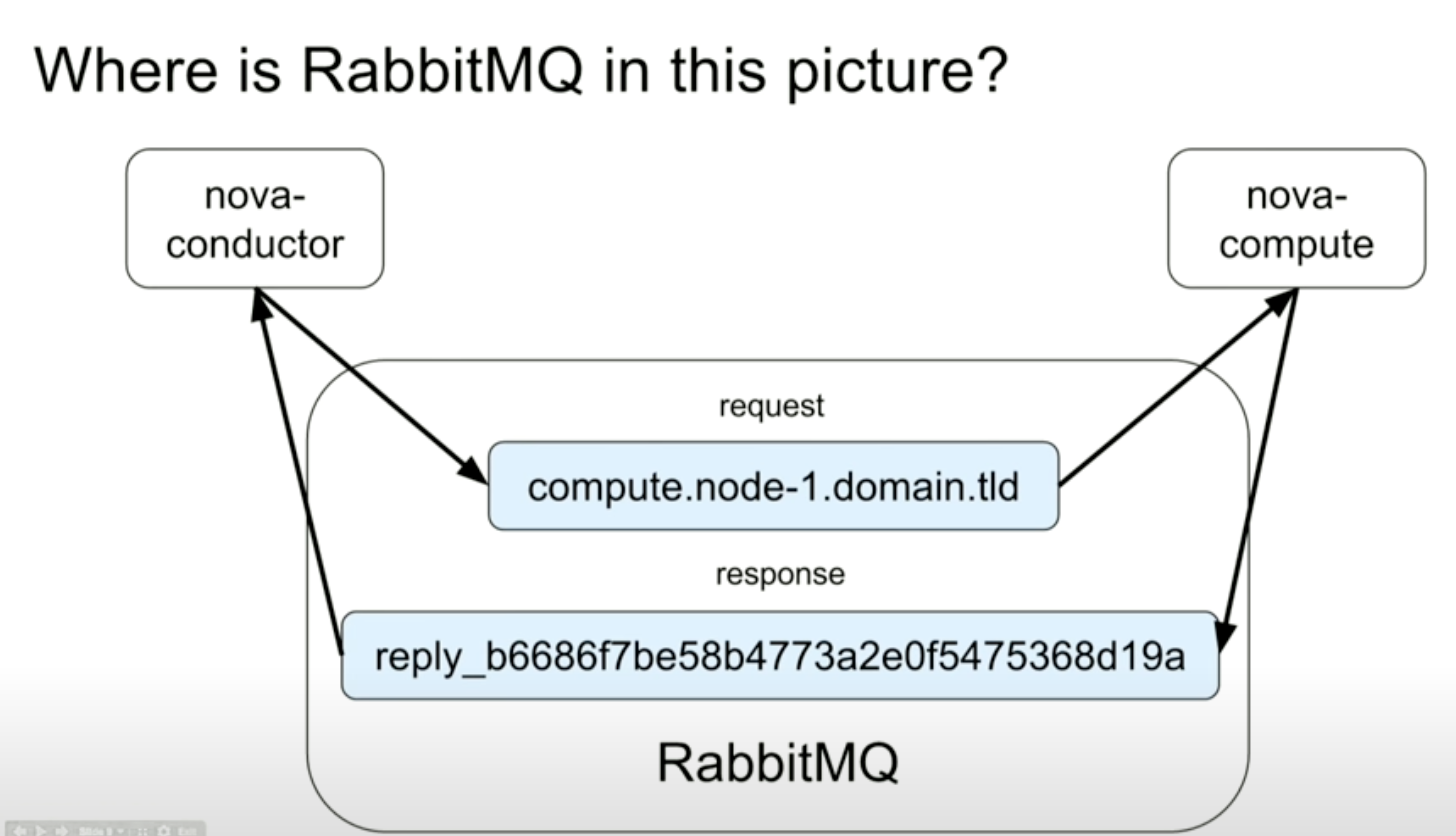

Error indicates that the exchange is not found, but who should create it? Let's review a bit the relation between rabbitmq within nova-conductor and nova-compute

We can see that the nova-conductor will send the message directly to the queue and the compute node will consume it. In return, if the nova-compute needs to reply back to the conductor, it will use a queue with prefix reply_. So to anwser our above question, the nova-compute nodes are responsible for the reply_ queues' recreation.

Restart all the nova-compute services and everythings look fine.